The Crucial Human Element: Why AI Alone Isn't Enough for Effective Communication

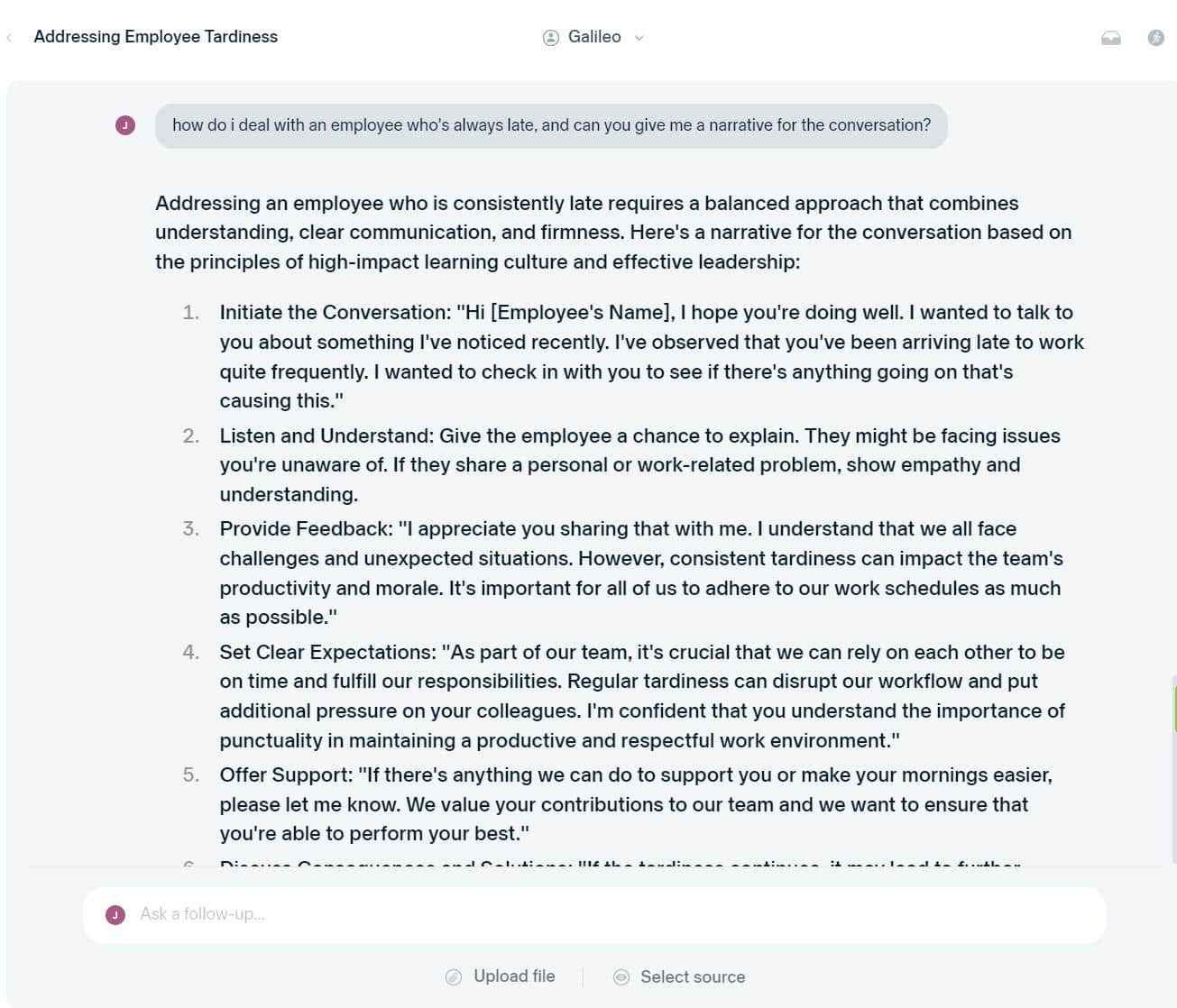

AI is increasingly permeating the world around us, but artificial intelligence is just that: artificial. It lacks a fundamental understanding of the humans it produces information for. The snippet below, from a Josh Bersin article, is a good example of AI’s limitations in a learning and development (L&D) context.

The advice from this generative AI tool tells you nothing about the person you are talking to, or how best to approach the subject with them based on their unique energetic makeup.

For a conversation like this to have real impact, approach it in a way that speaks to the other person’s highest energy or energies.

- For someone high in Excite, it is important to make a connection first. Give them a chance to talk, and make sure you really listen before raising any critiques.

- For someone high in Examine, make sure you have data to back up your point. Note the specific times they have been late and give them concrete examples of the issue.

- The dialogue suggested in the “Set Clear Expectations” section may work for someone high in Execute, who places a lot of emphasis on workflow and productivity. Try the same approach with someone high in Explore, however, and you will watch their eyes glaze over; all they will hear is “blah, blah, workflow,” “blah, blah, punctuality,” “blah, blah, productive” in a robot voice.

Key takeaways:

- AI can be a very powerful support tool, but when it comes to people and people problems, it’s not ready to take center stage just yet.

- It is important to supplement your AI use with tools designed to help you understand yourself and the people around you.